Web crawling made easy for businesses.

Our platform is efficient, scalable, and user-friendly, transforming web crawling into an accessible art. Dive into data effortlessly with Crawlab.

Trusted by these six companies so far

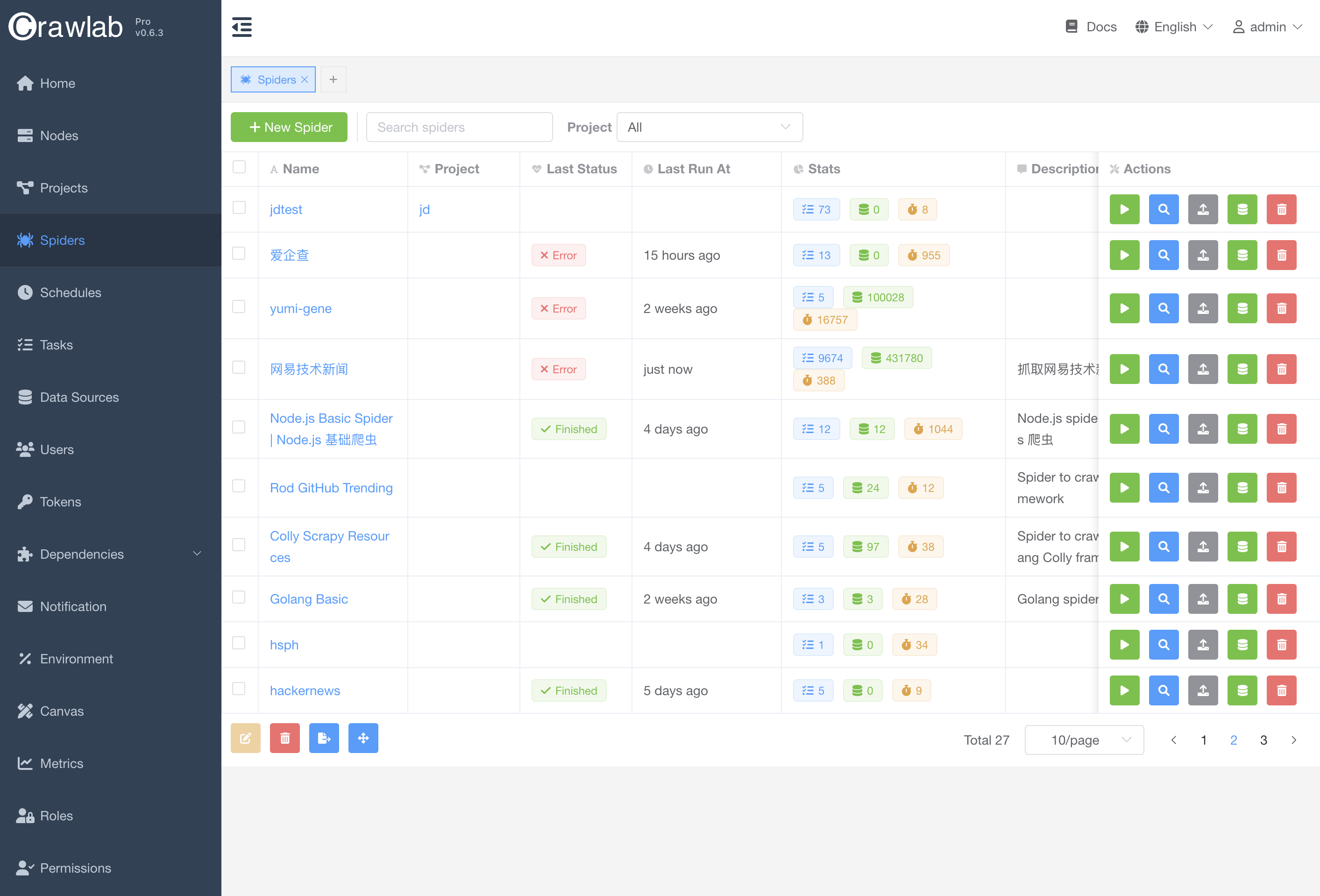

Everything you need to manage web crawlers.

Well, everything you need if you aren't confident about running web crawlers or spiders at scale.

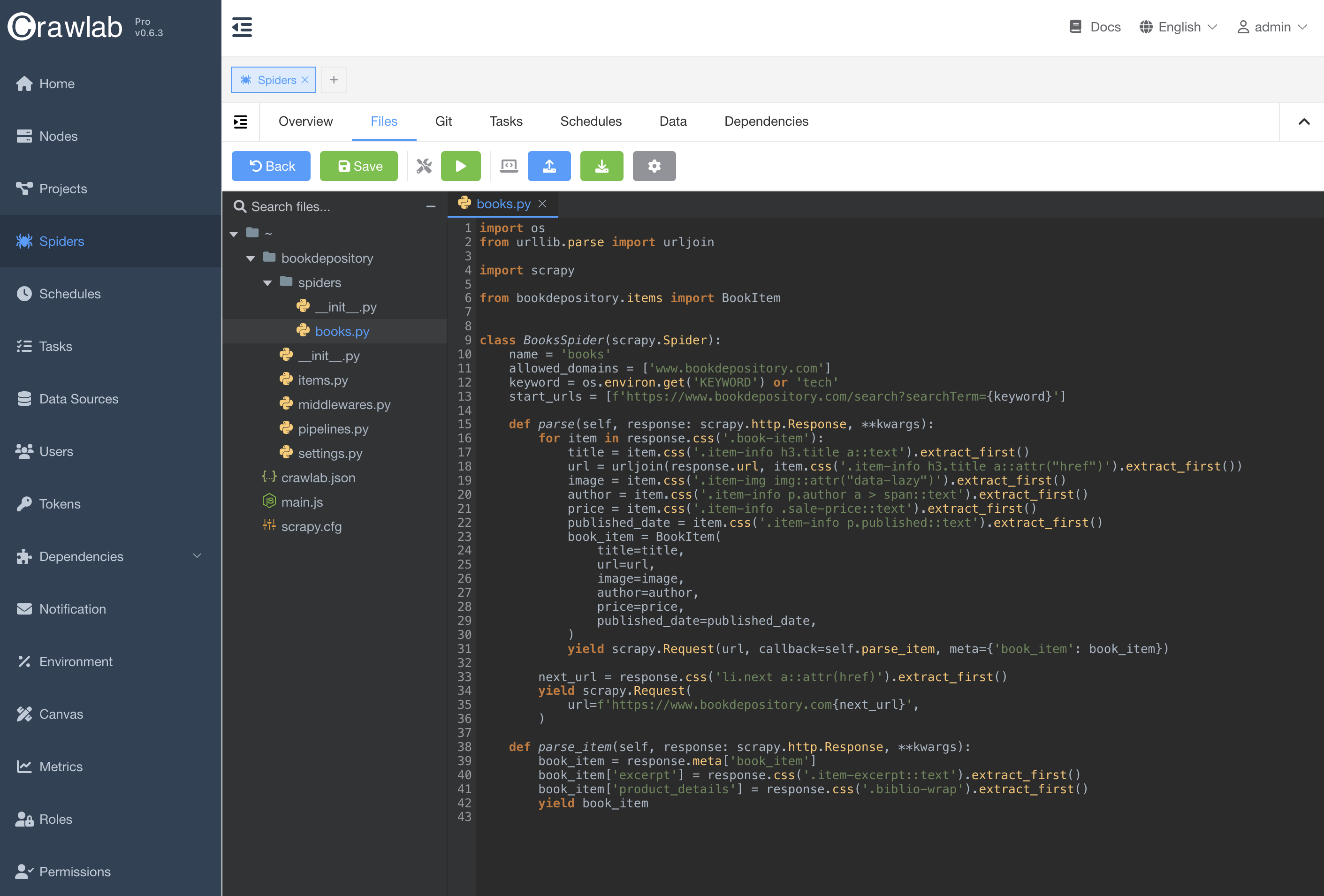

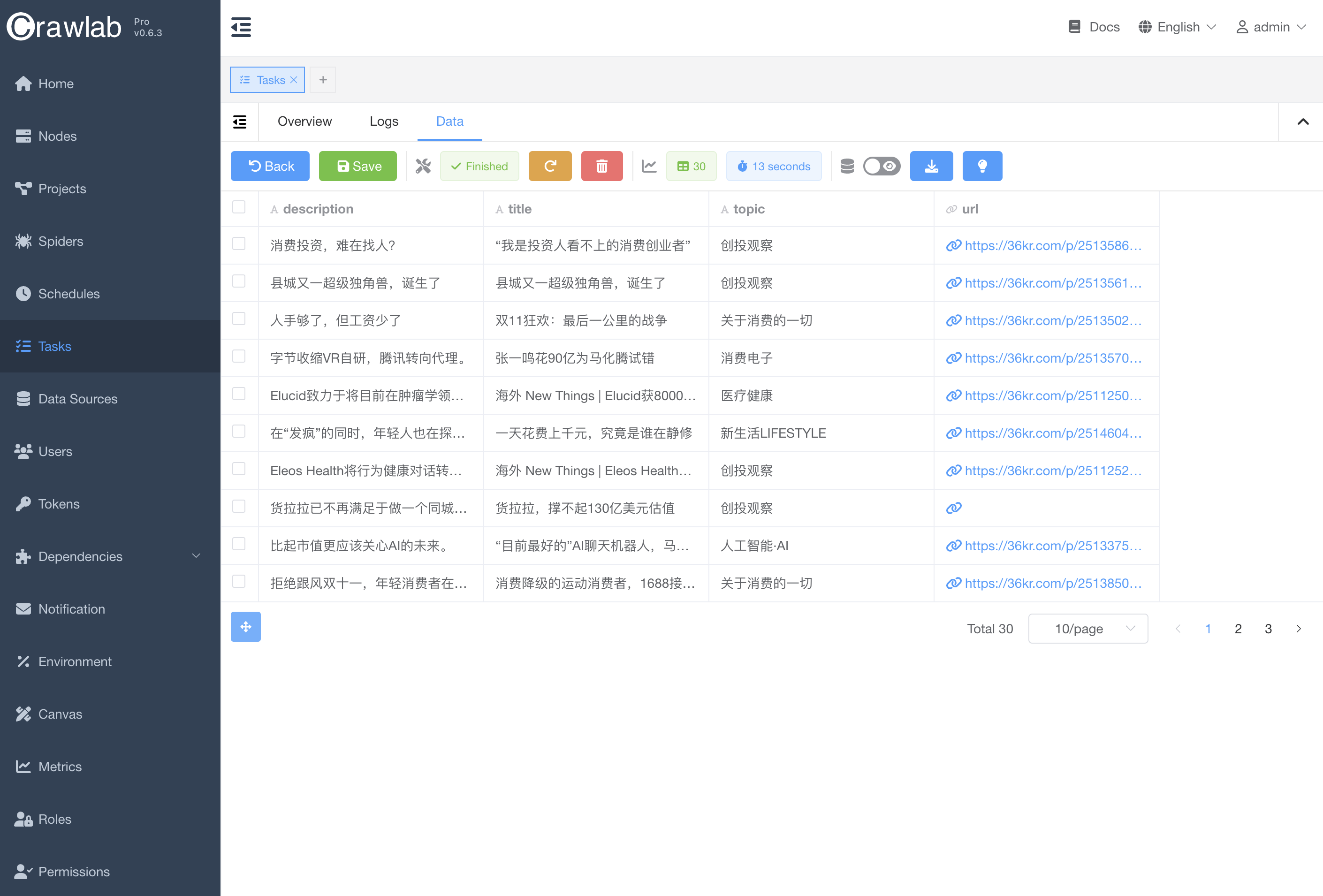

Users can customize spiders to target specific data, catering to diverse needs and applications, from market research to competitive analysis.

Simplify web crawling tasks.

Streamline your data journey: seamless web crawling for effortless insights and endless possibilities.

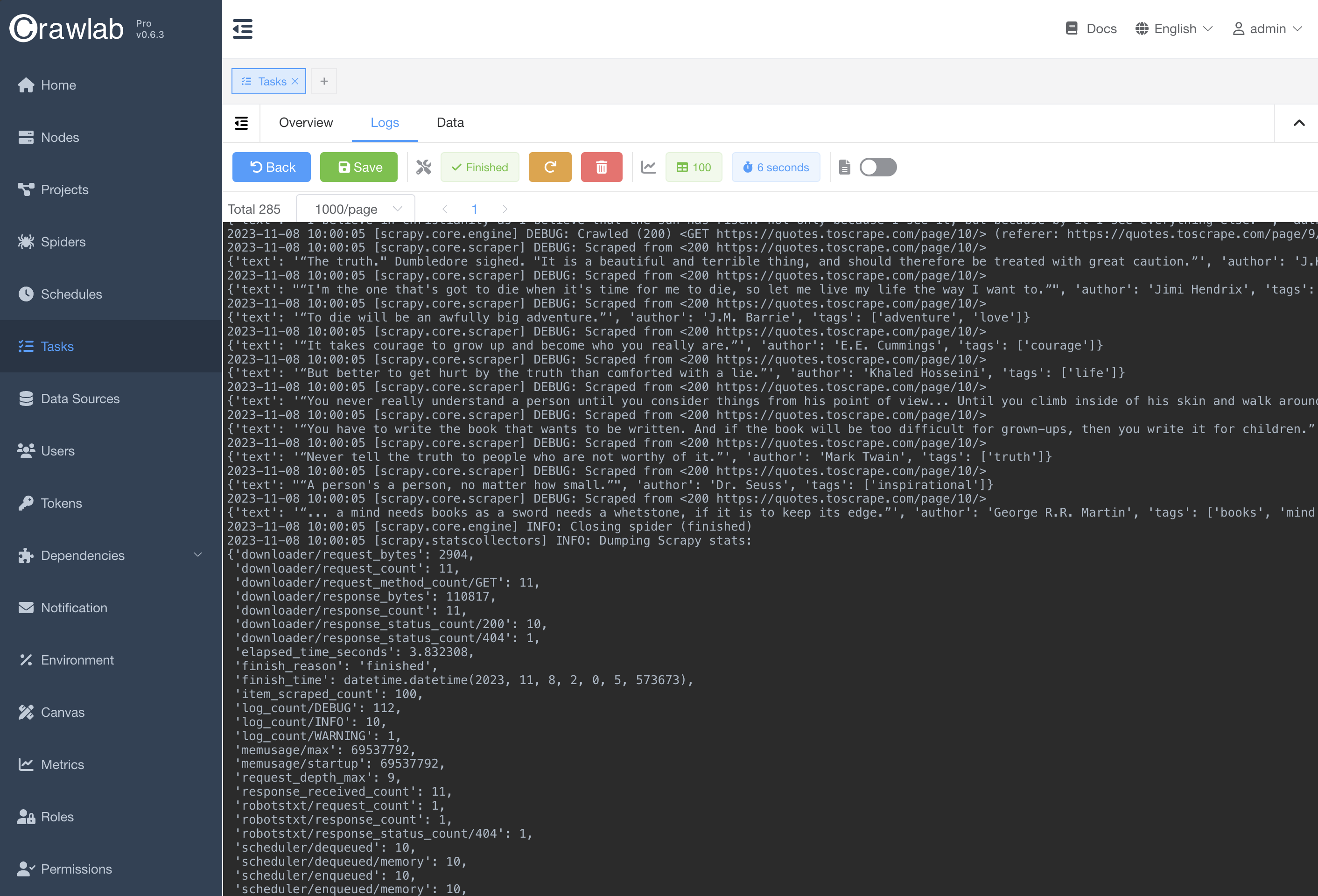

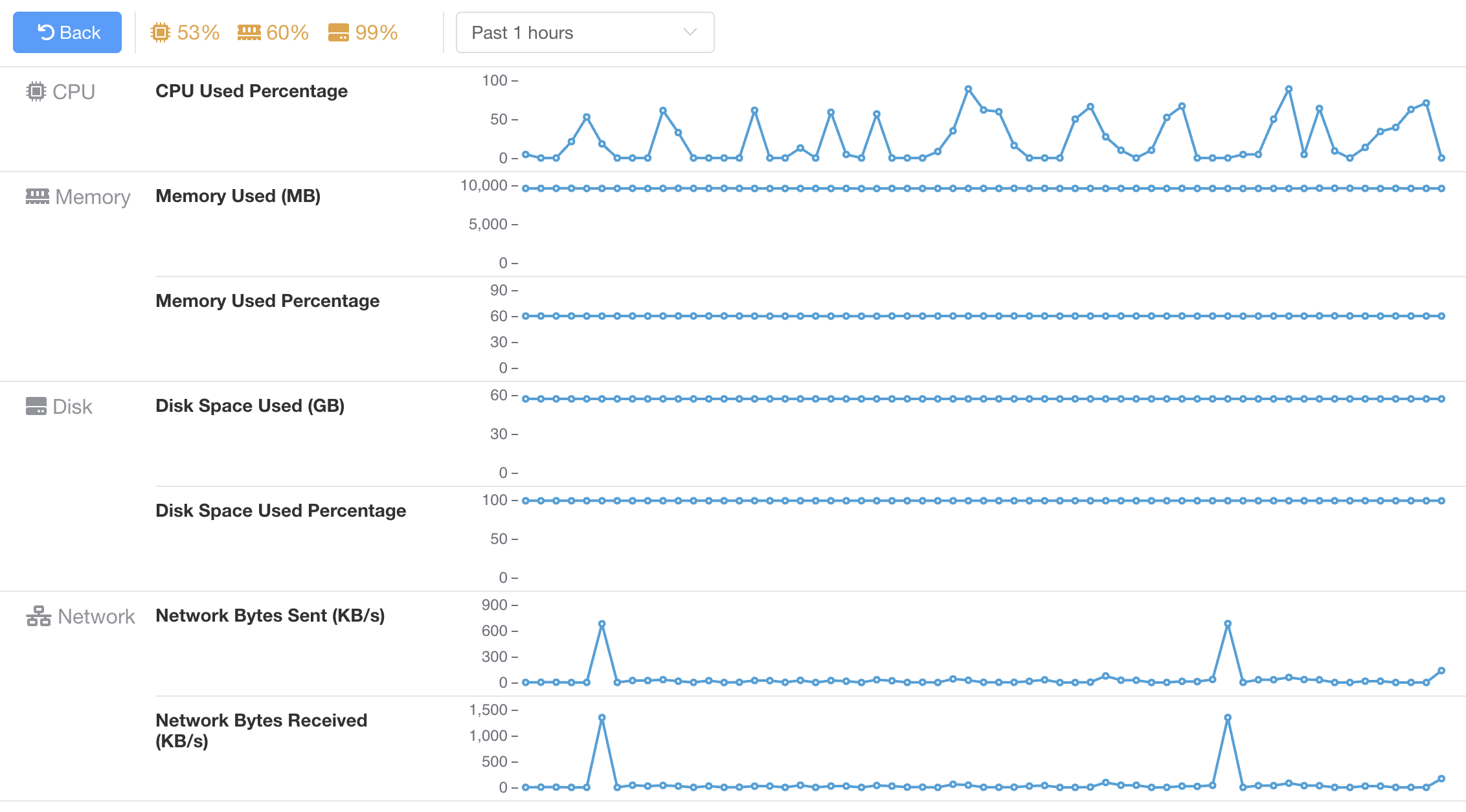

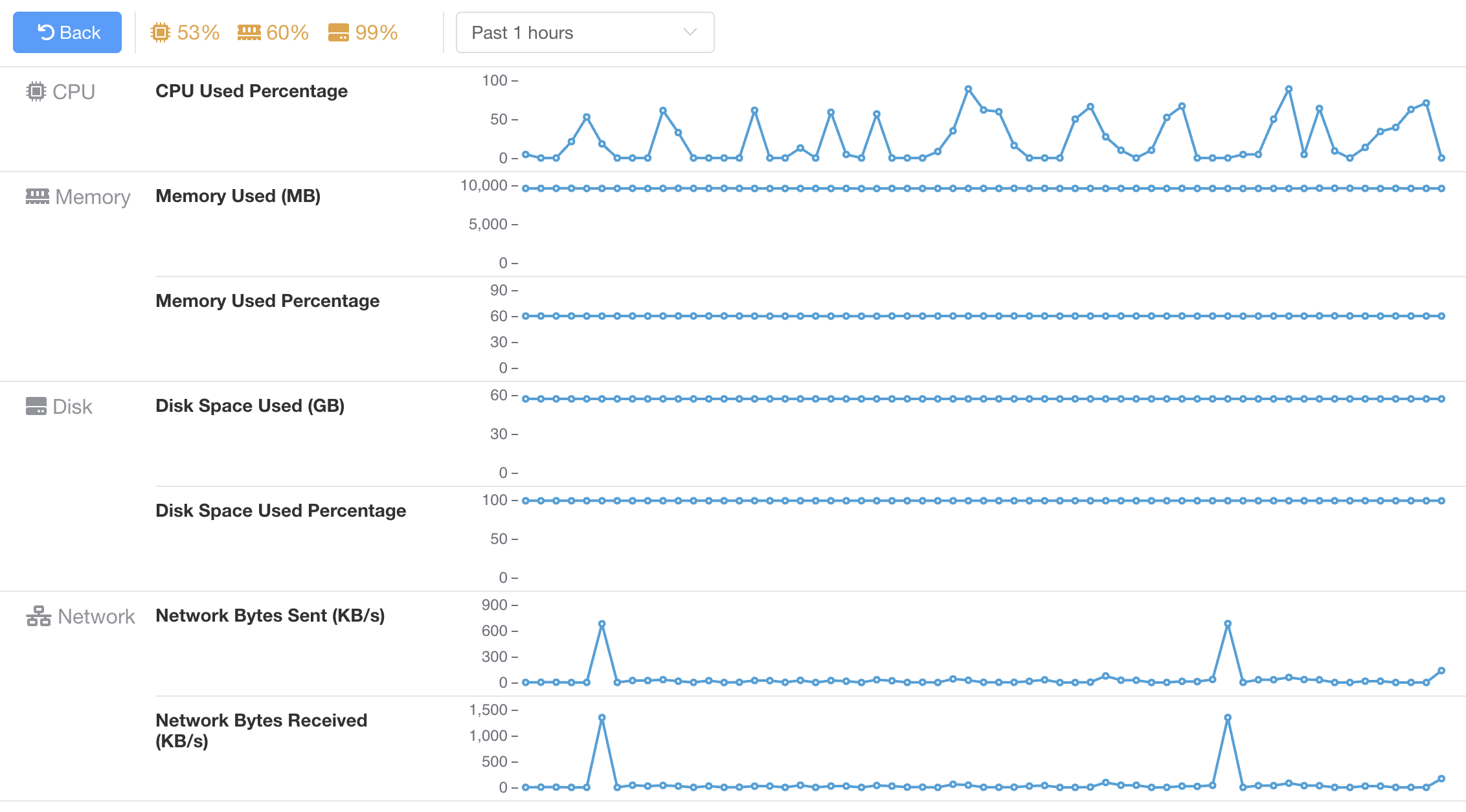

Performance Monitoring

Optimized Insight with Performance Monitoring

Track real-time performance metrics, identify bottlenecks, and optimize operations for peak performance. Our intuitive dashboard provides a comprehensive overview, allowing you to make data-driven decisions swiftly and enhance overall productivity.

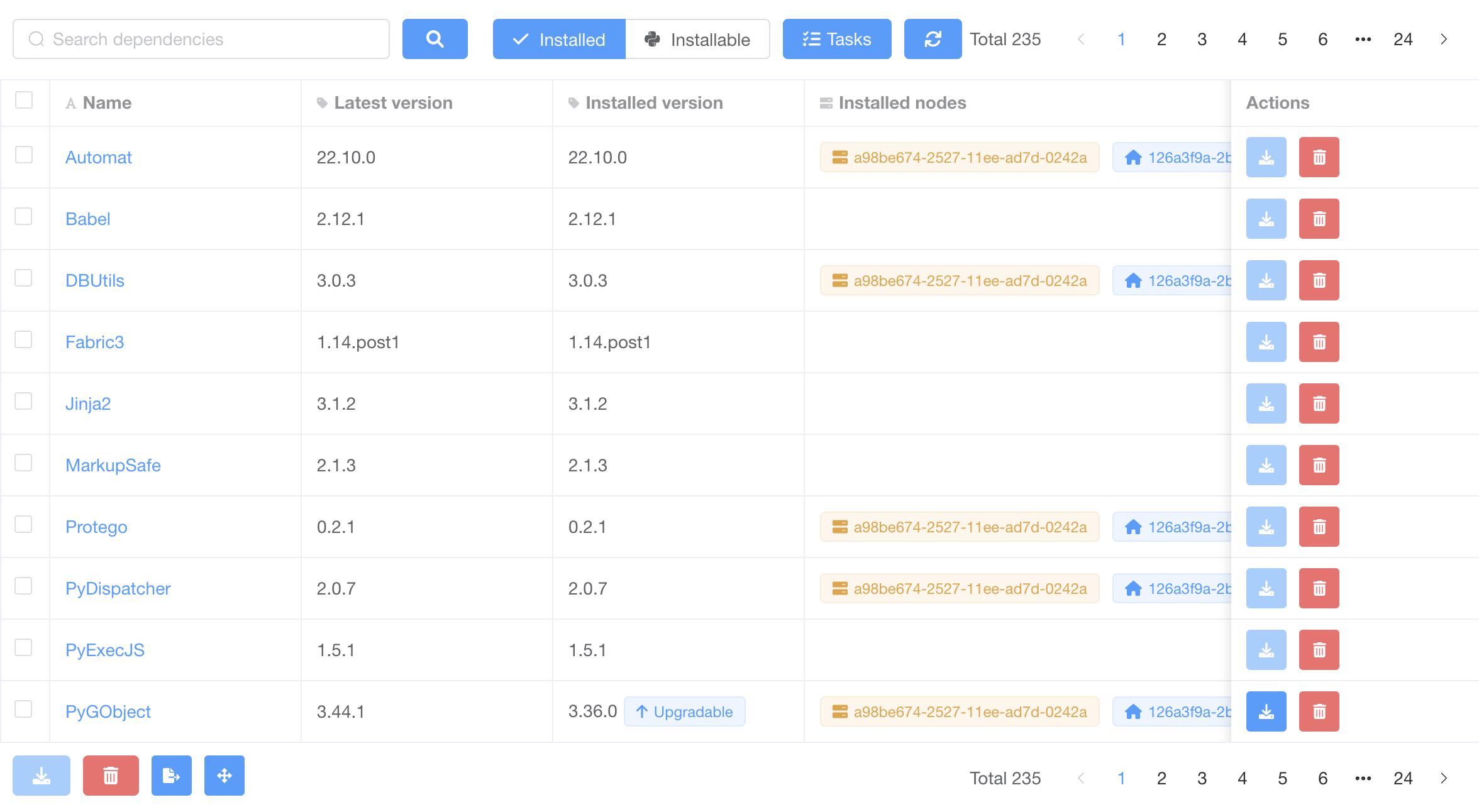

Dependency Management

Streamlined Operations with Advanced Dependency Management

This feature ensures seamless integration and coordination between various components of your projects. It automatically manages and updates dependencies, reducing manual oversight and minimizing the risk of conflicts or errors.

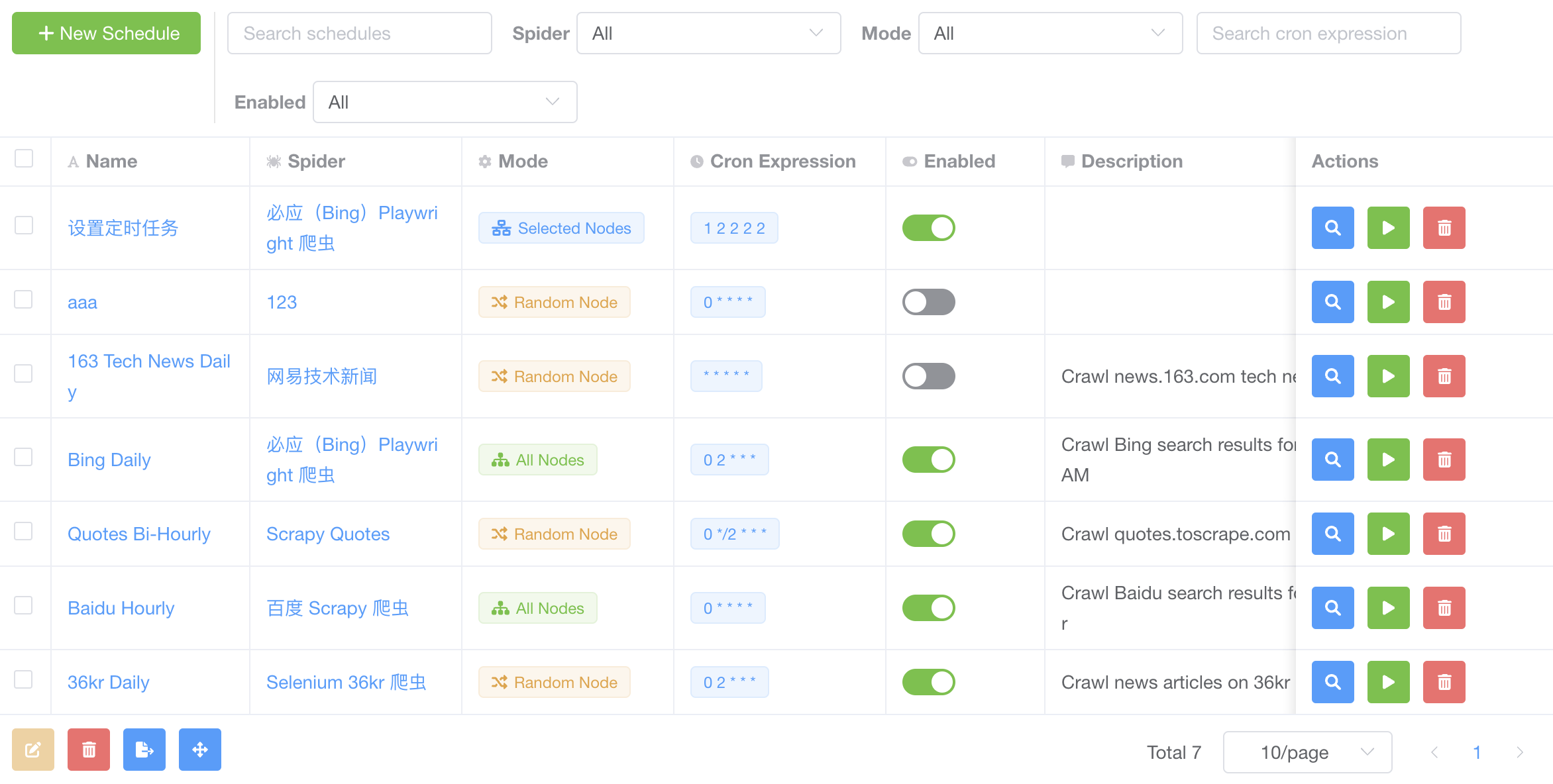

Scheduled Tasks

Efficiency at Your Fingertips: Mastering Scheduled Tasks

Automate routine tasks and set up customized schedules to improve operational efficiency. Enjoy the freedom of automation while maintaining control over your key operations, ultimately boosting productivity and consistency.

Get started today

It’s time to take control of your web crawlers. Buy our software so you can feel like you’re doing something productive.

Loved by businesses worldwide.

Our software is so simple that people can’t help but fall in love with it. Simplicity is easy when you just skip tons of mission-critical features.

Crawlab has streamlined our web scraping projects. Its management platform is intuitive and robust, allowing our team to handle multiple crawlers with ease. It's significantly boosted our productivity.

Samantha T., Web Developer

With Crawlab, managing our data scraping tasks has become a breeze. The ability to monitor and control crawlers in real-time has greatly enhanced our data collection strategies.

Amy H., Data Scientist

Crawlab's platform has transformed how we approach SEO. Managing and analyzing data from various crawlers in one place has made our workflow much more efficient.

Leland K., SEO Specialist

Implementing Crawlab in our IT projects has been a game-changer. Its seamless integration and management capabilities for various web crawlers have made our data gathering processes more streamlined than ever.

Erin P., IT Project Manager

For digital marketing, Crawlab has been invaluable. Coordinating multiple web crawlers for market research is now incredibly straightforward and effective, thanks to its user-friendly interface and powerful features.

Peter R., Digital Marketing Manager

Crawlab has revolutionized how we manage web crawling for our e-commerce analytics. It’s easy to set up, monitor, and manage crawlers, providing us with the timely data we need to make informed decisions.

Derek T., E-commerce Operations Manager

Pricing (Crawlab)

The right price for you, whoever you are

Explore tailored plans that fit your needs and budget.

Enterprise

Yearly payment, cancel any time.

- Basic features

- Unlimited crawling tasks

- Multi-language file editing

- Data export

- Real-time performance monitoring

- RBAC user management

- Priority technical support

- Unlimited nodes

Permanent

Lifetime payment, no additional fee.

- Basic features

- Unlimited crawling tasks

- Multi-language file editing

- Data export

- Real-time performance monitoring

- RBAC user management

- Priority technical support

- Unlimited nodes

- Permanent usage

Crawlab AI Service

We also offer AI-powered data extraction services that can automatically extract data from various websites.

Frequently asked questions

If you can’t find what you’re looking for, email our support team (zhangyeqing@core-digital.cn) and we will get back to you.

I’m not sure if I need Crawlab. Can I try it out before I buy?

Yes, we offer the community edition of Crawlab. You can try it out before you buy.

Can I request an invoice?

Absolutely, we are happy to provide an invoice.

Will technical support be provided?

Yes, we provide technical support for all paid plans.

Does Crawlab support distributed crawling?

Yes, Crawlab supports distributed crawling, and you can deploy it on multiple machines.

How does Crawlab work?

Crawlab is a web-based crawler development and management platform. It provides a web interface for users to develop, run, monitor, and manage crawlers conveniently.

Does Crawlab support Scrapy?

Yes, Crawlab supports Scrapy, so you can run and manage your Scrapy spiders with it.

Does Crawlab support different programming languages?

Yes, Crawlab supports Python, Node.js, Go, and Java, regardless of framework.
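As a rough illustration of what a framework-free spider can look like, here is a minimal sketch that uses only Python's standard library. The names `LinkExtractor` and `extract_links` are hypothetical, the sample HTML is made up, and printing one JSON object per line is just an assumption for the example. How results are handed over to Crawlab is covered in the official documentation and is not shown here.

```python
import json
from html.parser import HTMLParser


class LinkExtractor(HTMLParser):
    """Collect the href and visible text of every <a href=...> tag."""

    def __init__(self):
        super().__init__()
        self.links = []     # finished {"url": ..., "text": ...} records
        self._href = None   # href of the <a> tag currently open, if any
        self._text = []     # text fragments seen inside that tag

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            # attrs is a list of (name, value) pairs; anchors without href are skipped
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            text = "".join(self._text).strip()
            self.links.append({"url": self._href, "text": text})
            self._href = None


def extract_links(html):
    """Return a list of {"url", "text"} dicts for the links found in html."""
    parser = LinkExtractor()
    parser.feed(html)
    parser.close()
    return parser.links


if __name__ == "__main__":
    # Hypothetical sample page; a real spider would fetch this over HTTP.
    sample = '<ul><li><a href="/a">First</a></li><li><a href="/b">Second</a></li></ul>'
    for item in extract_links(sample):
        print(json.dumps(item))
```

The same idea carries over to Node.js, Go, or Java: the spider is an ordinary program that fetches pages, extracts the fields you care about, and emits structured records.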

How do I install Crawlab?

You can install Crawlab by following the instructions in the official documentation. It's pretty simple to deploy with Docker.

Can I upgrade Crawlab to a higher version?

Yes, you can upgrade Crawlab to a higher version as long as your plan is still valid.